During the Open Fields conference, Adnan presented “after.video – displaying video as theory and reference system”:

360º Video and Interactive Storytelling

Aigars CEPLITIS / Luis BRACAMONTES / Adnan HADZI / Arnas ANSKAITIS / Oksana CHEPELYK

Venue: The Art Academy of Latvia

Moderator: Chris HALES

Aigars CEPLITIS. The Tension of Temporal Focalization and Immersivity in 360 Degree 3D Virtual Space

The fundamental raison d’être for the productions of immersive technologies is the attainment of an absolute psychosomatic and physical embodiment. The impasse, however, for audiovisual works shot in 360° space is that their current schemata, as well as their visual configuration, oppose the very type of experience they strive to deploy. To crack the code of a narrative design that would enable 360° films to offer a truly immersive experience, a number of 360° video prototypes have been created and tested against the backdrop of Seymour Chatman’s narrative theory and Marco Caracciolo’s theories of embodied engagement, in order to assess the extent of immersion in a variety of 360° narrative settings, zooming in on summary, scene, omission, pause, and stretch. This prototype simulation is followed by tests of audiovisual plates whose micronarratives are structured in a rhizomatic pattern. Classical films are edited elliptically: cinema demarcates cut and omission, the cut being an elliptical derivative, and favors freeze frames as a pause for pure description. In 360° cinema, in turn, omission, cut, and pause do not operate properly; its preference for the here and now creates an inherent aversion to montage. Singulative narrative representations of an event (describing once what happened once) remain the principal core of spherical cinema, with repetitive representations deployed rarely, merely as special effects, or as a patterning device in flashbacks or thought-forming sequences through the post-digital editing style. Repetitive sequences in 360° become particularly disturbing when their digital content is viewed using VR optical glasses instead of a desktop computer, and such contrasts answer more fundamental questions: whether montage is detrimental in 360° film, what types of story material and genre are more suitable for 360° cinema, and how we gauge the level of embodiment.
Finally, the residual analysis of the aforementioned prototype simulation brings to the fore rhizomatic narrative kinetics (the fusion of the six Deleuzoguattarian principles with the classic narrative canons), which should become, de facto, the language of 360° if embodiment is to be the key.

Biography. Aigars Ceplītis is the Creative Director of the Audiovisual Media Arts Department at RISEBA University, where he teaches Advanced Film Editing Techniques and Film Narratology. He is also a PhD candidate at New Media MPLab, Liepaja University, where he is investigating novel storytelling techniques for 360 degree cinema. Aigars has worked as a film editor on the feature films “The Aunts”, “The Runners”, “A Bit Longer” and “Horizont”, and on 20 episodes of the TV miniseries “The Secrets of Friday Hotel”. He formerly served as office manager and film editor for Randal Kleiser, an established Hollywood director best known for hits such as “Grease” and “Blue Lagoon”. While in Los Angeles, Aigars headed a film and video program for disadvantaged children of Los Angeles under the auspices of the Stenbeck family, the owners of MTG. Aigars holds an M.F.A. in Film Directing from California Institute of the Arts and a B.A. in Art History from Lawrence University in Wisconsin.

Luis BRACAMONTES. Teleacting the story: User-centered narratives through navigaze in 360º video

This research explores the possibilities of a user-centered narrative strategy for 360º video through a new feature called “navigaze”. Navigaze is a feature introduced by the Swedish startup “SceneThere”, and it allows a controlled level of agency in the storytelling that sits on the borderline between gaming and film. “Teleaction” here is understood from Manovich’s perspective of “acting over distance in real time”, as opposed to “telepresence”, which implies only “seeing at a distance”. Immersive storytelling for Virtual Reality and 360º video presents a new challenge for creators: the death of the director, at least in the traditional sense seen in other media such as theater or film, as the medium’s frameless quality and highly active nature demand a fluid and flexible narrative.

Navigaze allows the user to inhabit a story instead of just witnessing it. Through a space-warp feature reminiscent of Google Street View, users can explore the “virtual worlds” and unravel pieces of the story on their own by gazing at blue hotspots that transport them to the next location within the same world. Thus, the story becomes a set of puzzle pieces that each person can assemble as they wish, creating a unique narrative experience, similar to Julio Cortázar’s game-changing novel “Hopscotch” (1963).

Focusing on two pieces by SceneThere, “Voices of the Favela” (2016) and “The Borderland” (2017), this research delves into the evolution of storytelling in VR and 360º video and the early stages in the creation of their own narrative language.

Biography. Luis Bracamontes is a narrative designer and writer specializing in storytelling for VR and AR. He is an intern at the virtual reality start-up “VRish” in Vienna and is currently studying for an Erasmus Mundus Joint Master Degree in “Media Arts Cultures” at Danube University Krems, Aalborg University and the University of Łódź. He has a B.A. in Communication Sciences with a specialization in Marketing and has worked for over 6 years in performing arts and literature. In 2014, he was awarded the “Youth Achievement Award for Art & Culture”, an honorary award given by the City Hall of Morelia to the most promising and active young people with an outstanding trajectory in art and culture, for the work of his production company “Ala Norte”. Since 2015, he has been a member of the Society of Writers of Michoacán (SEMICH). In 2016, he worked as an innovation and marketing consultant in the VIP Fellowship by Scope Group and the Ministry of Finance of Malaysia, in Kuala Lumpur. His recent research includes a paper on hybrid VR narratives supervised by Oliver Grau, and a research project on post-digital archive experiences supervised by Morten Sondergaard and presented at the NIME (New Interfaces for Musical Expression) International Conference 2017 in Copenhagen.

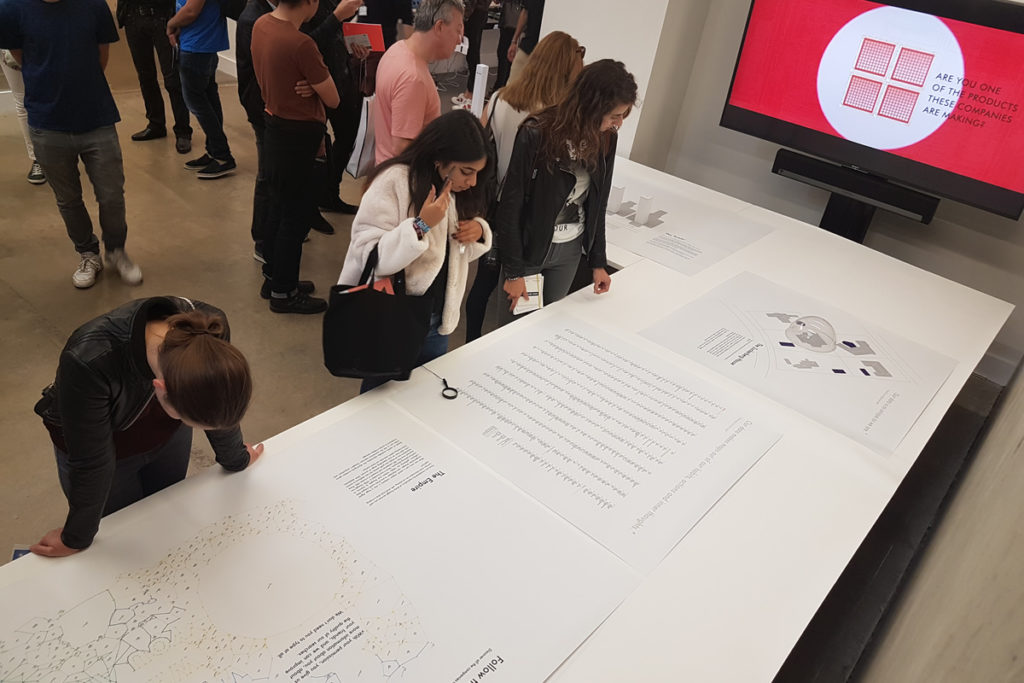

Adnan HADZI. after.video – displaying video as theory and reference system

After rising during the 1960s and 70s with portable devices like the Sony Portapak and other consumer-grade video recorders, video culture has subsequently undergone the digital shift. With this evolution the moving image inserted itself into broader, everyday use, but also extended its patterns of effect and its aesthetic language. Film and television alike have transformed into what is now understood as media culture. Video has become pervasive, importing the principles of “tele-” and “cine-” into the human and social realm, thereby also propelling “image culture” to new heights and intensities. YouTube, emblematic of network and online video, marks a second transformational step in this medium’s short evolutionary history. The question remains: what comes after YouTube?

This paper discusses the use of video as theory in the after.video project (http://www.metamute.org/shop/openmute-press/after.video), reflecting the structural and qualitative re-evaluation the project aims at on both the design and the organisational level. In accordance with the qualitatively new situation video is set in, the paper discusses a multi-dimensional matrix which constitutes the virtual logical grid of the after.video project: a matrix of nine conceptual atoms is rendered into a multi-referential video-book that breaks with the idea of linear text, as it can be read from left to right, top to bottom, diagonally and in ‘steps’. Unlike previous experiments with hypertext and interactive databases, after.video attempts to translate online modes into physical matter (a micro computer), thereby reflecting logics of new formats that would otherwise go unnoticed. These nine conceptual atoms are then recombined differently throughout the video-book, rendering a dynamic, open structure and allowing access to the after.video book over an ‘after_video’ WiFi SSID.

Biography. Dr. Adnan Hadzi has been a regular at Deckspace Media Lab for the last decade, a period over which he has developed his Goldsmiths PhD, based on his work with Deptford.TV. Deptford.TV is a collaborative video editing service hosted in Deckspace’s racks, based on free and open source software and compiled into a unique suite of blog, CVS, film database and compositing tools. Through Deptford TV and Deckspace TV he maintains a strong profile as a practice-led researcher. Directing the Deptford TV project requires an advanced knowledge of current developments in new media art practices and the moving image across different platforms. Adnan runs regular workshops at Deckspace. Deptford.TV / Deckspace.TV is less TV and more film production, but has tracked the evolution of media toolkits and editing systems such as those included in the excellent PureDyne Linux project.

Adnan is co-editing and producing the after.video video book, exploring video as theory and reflecting upon networked video as it profoundly reshapes medial patterns (YouTube, citizen journalism, video surveillance etc.). More particularly, this volume revolves around a society whose re-assembled image sphere evokes new patterns and politics of visibility, in which networked and digital video produces novel forms of perception, publicity and even (co-)presence. A thorough, multi-faceted critique of media images that takes up the perspectives of practitioners, theoreticians, sociologists, programmers, artists and political activists seems essential; the result is a unique publication that reflects upon video theoretically while also attempting to fuse form and content. http://orcid.org/0000-0001-6862-6745

Arnas ANSKAITIS. The Rhetoric of the Alphabet

Through practice and research I aim to reflect on the connections between language, perception, writing and non-writing.

Jacques Derrida wrote in his seminal book Of Grammatology: “Before being its object, writing is the condition of the episteme”. I am curious: to what extent is a written text still (or should it be) the condition of knowledge in artistic research? Does artistic research in general belong to and depend on this understanding of science? Would it be possible to do research without writing? How, then, could one share the findings and outcomes of such research with the public and other researchers?

Writing interests me not only in the context of language, but also from the position of handwriting. How did letters of the alphabet emerge? It seems they were shaped by a human hand. What would letters look like if they were written not on a flat sheet of paper, but in simulated three-dimensional space? In an attempt to answer this self-imposed question, I have created 3D models of cursive letters and exhibit them as video projections. In each visualization an imaginary writing implement produces an uninterrupted trace, a stroke on the writing plane. Onto this digitally simulated stroke, the projection plane, a stream of texts and images is projected.

I will attempt to combine two sides of artistic research (practice and theory) through writing – a writing system as an art project. Part of the doctoral thesis could be written and presented using this system.

Biography. Arnas Anskaitis (b. 1988) is a visual artist, a lecturer and a PhD student at the Vilnius Academy of Arts. He employs a variety of media in his work, but always starts from a direct dialogue with the site and context in which he is working. His work has been shown at the Riga Photography Biennial (2016); National Art Museum of Ukraine, Kiev (2016); 10th Kaunas Biennale (2015); Contemporary Art Centre, Vilnius (2014); 16th Tallinn Print Triennial (2014); National Gallery of Art, Vilnius (2012); Gallery Vartai, Vilnius (2012), and in other projects and exhibitions.

Oksana CHEPELYK. Virtual Reality and 360-degree Video Interactive Narratology: Ukrainian Case Study

The aim of this thesis is to present some Ukrainian initiatives developing VR and 360-degree interactive video filmmaking: SENSORAMA in Kyiv and MMOne in Odesa. SENSORAMA, an immersive media lab, grows the VR/AR ecosystem in Ukraine by supporting talent with infrastructure, education, mentorship and investment.

The interactive documentary «Chornobyl 360», created by the founders of Sensorama Lab and filmed in 360-degree spherical view at the Chernobyl Nuclear Power Plant, the site of the 1986 Chernobyl disaster, has proven to be in demand on the global market. Immersive technologies are used to change human experience in fields that matter to millions, such as VR therapy research and healthcare. SENSORAMA is based in UNIT.city, a brand new tech park in Kyiv.

The company MMOne from Odesa has created the world’s first three-axis virtual reality simulator, Matilda: a chair attached to an industrial robot-like arm that moves in response to the action in a video game. MMOne hopes the invention will take the global gaming industry in some entirely new directions. The startup debuted Matilda in October 2015 at Paris Games Week in France, presenting its device in cooperation with the multinational video game developer Ubisoft, which created a racing game especially for Matilda called “Trackmania.” Since the Paris exhibition, several big companies from the U.S. IT community have asked to try out the chair, including YouTube; Opera Mediaworks, the world’s leading mobile advertising platform; Facebook’s Instagram; and Oculus LLC.

Biography. Dr. Oksana Chepelyk is a leading researcher at the New Technologies Department of the Modern Art Research Institute of Ukraine, author of the book “The Interaction of Architectural Spaces, Contemporary Art and New Technologies” (2009) and curator of the IFSS, Kiev. Oksana Chepelyk studied at the Art Institute in Kiev, followed by a PhD course in Moscow and studies at Amsterdam University, the New Media Study Program at the Banff Centre (Canada), Bauhaus Dessau (Germany) and the Fulbright Research Program at UCLA (USA). She has exhibited widely internationally and has received the ArtsLink 1997 Award (USA), FilmVideo99 (Italy), the EMAF 2003 Werkleitz Award (Germany), the ArtsLink 2007 Award (USA) and the Artraker Award 2013 (UK). Residencies: CIES, CREDAC and Cité Internationale des Arts in Paris (France), MAP, Baltimore (USA), ARTELEKU, San Sebastian (Spain), FACT, Liverpool (UK), Weimar Bauhaus (Germany), SFAI, Santa Fe, NM (USA), DEAC, Budva (Montenegro). She has been awarded grants from France, Germany, Spain, the USA, Canada, England, Sweden and Montenegro. Her work has been shown at MOMA, New York; MMA, Zagreb, Croatia; German Historical Museum, Berlin and Munich, Germany; Museum of the Arts History, Vienna, Austria; MCA, Skopje, Macedonia; MJT, LA, USA; Art Arsenal Museum, Kyiv, Ukraine; “DIGITAL MEDIA Valencia”, Spain; MACZUL, Maracaibo, Venezuela; “The File” – Electronic Language International Festival, São Paulo, Brazil; XVII LPM 2016 Amsterdam, Netherlands.

The MAZI Toolkit is made up of three elements.

In addition, you can directly access the Toolkit guidelines on GitHub, which includes up-to-date documentation: https://github.com/mazi-project/guides/wiki