Over 40,000 people visited The Glass Room in London and New York City. Hear how Tactical Tech and Mozilla are using immersive art to inform people about data security and privacy.

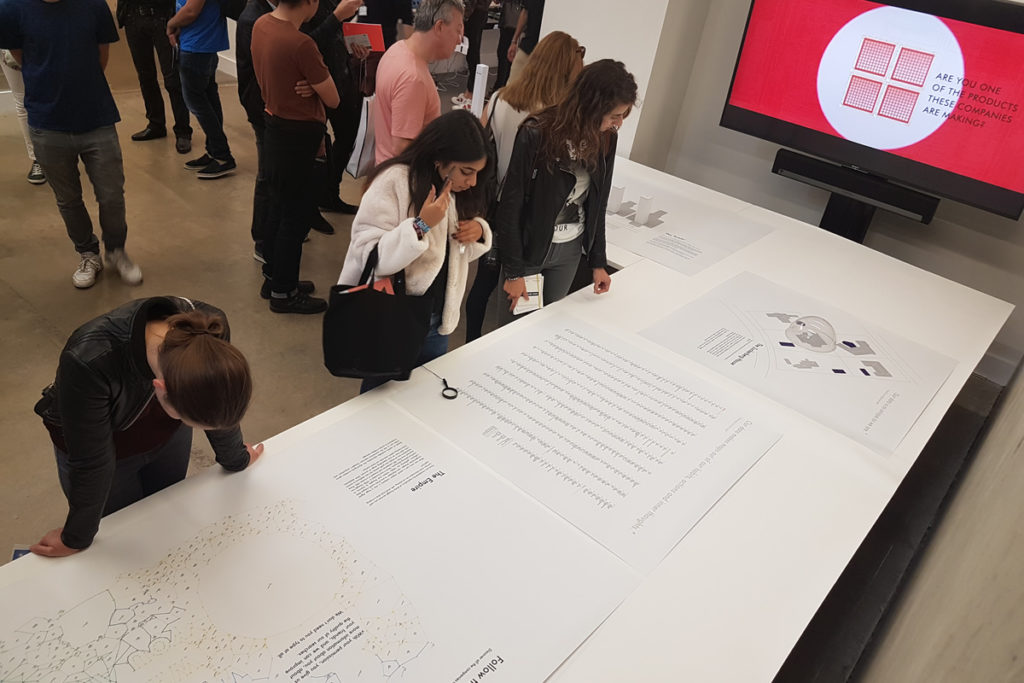

The Glass Room is an immersive, hands-on art experience that teaches people about personal data.

During two exhibitions, in New York City and London, over 40,000 people learned about how personal data is gathered, how it’s used, and the myriad types of data companies, governments, organisations and others access. A collaboration between Tactical Tech and Mozilla offers lessons in communicating about technical issues like data and privacy. It also provides insights into innovative public engagement strategies.

For a pedestrian on a busy street of London or New York, The Glass Room is a pop-up “tech store with a twist.” At first glance, it seems to offer the latest in shiny digital consumer products, such as the newest tablet or fitness tracker. But as you go inside, you find there’s nothing for sale. Instead, as you explore you’ll find a selection of art works exploring who is collecting our personal data and why, and what we can do about it.

The Glass Room brings together art, technology and the aesthetics of consumer retail. Visitors are presented with political questions about the data we share online with or without our knowledge. The exhibition creates a welcoming and accessible space that enables visitors to question their own assumptions about their digital lives and data, and to explore difficult and suppressed questions about their online activities.

A Glass Room tour. Photo by David Mirzoeff.

In the two years since opening “The White Room” as part of a larger exhibition at the Haus der Kulturen der Welt in Berlin, Tactical Tech has partnered with Mozilla to open Glass Room exhibitions in New York and London.

Following The Glass Room New York, the New York Times reported:

“To move through the Glass Room…was to be reminded of the many ways we unwittingly submit ourselves and one another to unnecessary surveillance, with devastating consequences… I left the Glass Room invigorated by the ways artists are exploring the dark side of our digital footprint.”

Tactical Tech has worked on data and privacy for some years. We’ve seen many public awareness campaigns on data, privacy and surveillance struggle to make a significant impact.

Putting together the Glass Room, we saw an opportunity to work with artists and their work to lead people outside their comfort zone and test their assumptions. By creating an immersive space we were able to test different forms of engagement through art objects, animations and products visitors could take away, such as our Data Detox Kits.

People interacting at the Data Detox Bar. Photo by David Mirzoeff.

With The Glass Room we hoped we could bring debates and discourse highly prevalent in academia and technology activism to a broader audience. In a sense we hoped to fill the perception gap between niche discussions and issues on technology and popular media depictions of technology, such as Black Mirror, by presenting abstract and often speculative issues as a real-life, tangible, and even tactile, experience.

It turned out to be a timely intervention as conversations about large-scale data harvesting enter more mainstream discourse. We challenged the audience’s willingness to engage with a broader and deeper reflection about the “quantified society,” the impact all-pervasive data sharing is having on our public sphere – transport, health, education – as well as on our sense of ourselves.

Following the success of the White Room in Berlin, we worked closely with Mozilla, artists, and designers from an experiential agency to expand and develop the concept to work in a retail context.

In both cities before opening, we launched advertising campaigns on billboards, in subway stations and online.

The exhibit was open for around three weeks in each city and over that period visitor numbers kept growing. By the final weekend in London we had reached our capacity inside and queues formed down the road.

The results: over 40,000 visitors attended, and there has been widespread media coverage, including articles in the New York Times, Channel 4 News, the BBC, New Scientist, Vogue and tech media such as The Verge. Social media activity was also sparked; for example, a Facebook Live event attracted over 47,000 viewers.

Audiences have been diverse. The majority of the visitors were ordinary passersby, who were either drawn in when walking past or heard about the exhibition by word of mouth. We attracted tourists, hipsters on the way to the cinema, families on a day out or simply people wandering in while shopping.

For the exhibits, we partnered with local artists to curate art works to add context and relevance so that the facts about how our personal data is being collected and used could come alive for non-technical visitors. We also installed a “Data Detox Bar” – at the back of the space in New York and right in the centre of the store in London – where people could pick up a “Data Detox Kit,” our easy 8-day guide to a digital makeover. We also created dedicated event spaces where we curated programmes involving activists, technologists, journalists, and some of our partner artists, where their own work around data and privacy was presented and discussed.

Glass Room visitors, 2017. Photo by Alistair Alexander.

Critically, for both cities, we recruited and trained a local group of “Ingeniuses” from diverse backgrounds and communities. Many had no experience in technology or privacy but after a four-day training camp, had enough knowledge to give privacy help and advice. They were a constant and welcoming presence in the space, engaging visitors in conversations, providing explanations, and even offering some recommendations when such were sought. They also led workshops, free and open to everyone and to all levels of technical knowledge, with titles such as “WTF – What the Facebook,” “Mastering your Mobile” and “DeGooglize your Life.”

The exhibitions sparked a depth of engagement rarely seen in awareness-raising campaigns. Many visitors stayed for an hour or more; some stayed for an entire day to take free workshops. Lots of people came back for repeat visits, often bringing with them friends or family.

In New York and London visitors filled out over 840 feedback cards. Some typical feedback:

After visiting the Glass Room I feel…

- As if I’m finally accessing the vault control room. Shocked, enlightened, provoked.

- Happy someone is showing us how our data is being used.

- Interested in technology and data. I want to study technology and data. (from an eight-year-old youngster)

- Glad there is so much research keeping watch on corporate surveillance.

- My mind has been opened.

- Healthily paranoid.

After visiting the Glass Room I want…

- To cleanse my online life and move away from Google.

- To know more. It is not about becoming paranoid, it’s about being more prepared.

- To get involved in protective steps to recover privacy for all.

- To be more creative how I talk to people about privacy and security.

- To talk to human beings more than ever.

A child at interactive Glass Room exhibit in London. Photo by David Mirzoeff.

What we learned

What can be taken away from this project? We think at least these things:

By setting up an exhibition in prime shopping locations, we were able to take issues of data and privacy to where people are, rather than hoping they’d come to us.

By using art to explore these topics, we were challenging people’s assumptions in ways a conventional narrative can never achieve, and we were opening avenues for further enquiry.

By mirroring the design cues of tech stores, we used a visual language that everyone understands, and thus we were able to attract people who might well be put off by an art exhibition.

By taking the issues offline and creating an immersive physical space that was free and open to everyone, we created a public spectacle – an inclusive space where the experience of discovering these curious objects was shared with others, making the whole experience memorable and impactful.

And perhaps most crucially, by having a team of Ingeniuses who were from the local communities, we made the exhibition a vibrant, warm and human space – where visitors always had someone they could talk to.

Challenges

Of course, putting on a project of this scale has its limitations and challenges.

Renting prime retail locations and launching outdoor advertising campaigns doesn’t come cheap and we – like most small non-profits would – needed additional support. We were lucky that Mozilla proved to be the perfect partner. Not only did they have resources to do the project justice, but as the project progressed it became clear that their objectives and values were closely aligned with ours, and they gave us the freedom and support to develop the concept as far as we could.

Even with such an ideal partner though, an exhibition like this can only be temporary and will only reach a limited number of people in a very specific location. So we need to figure out ways of reaching more people, in more places, at substantially less cost.

Easier said than done, but we’re working on it. Over 2017 we developed a portable version of the exhibition, the Glass Room Experience. This kit recreates some of the objects from the main exhibition as 3D cardboard shapes and posters. We trialed this at around 10 events in Europe as we iterated the design. The kit development, production and distribution cost only a fraction of the main exhibition. And it provides small organisations and groups with materials that they can use to help explain these issues to their communities.

Although the Experience is far smaller, we believe its effect on visitors – over 7,000 of them to date – has been highly positive, and it has allowed us to take the Glass Room to places and people who would never see the full Glass Room.

The future

We are working again with Mozilla to produce a new edition of the “Internet of Things” that will be distributed to 75 events and organisations around the world in 2018. At the same time, we plan to find “multiplier” organisations that can print, distribute and support dozens of Glass Room Experience events themselves – allowing us to reach far beyond what our own capacity would allow.

We’ll also be working with larger events and festivals to produce a mobile version of the exhibit, Glass Room Plus, which will be entirely self-funded. And we’ll be looking at developing new versions for schools and young people, libraries and universities, among many other audiences.

We’ll be looking at developing our self-learning resources online, so people can access more structured approaches to improving their online privacy.

We may yet open a major Glass Room pop-up store in another major world city in the future, but for now we’re working on promising avenues that can take Glass Room themes and narratives to yet new places and contexts: a hack lab in Lagos, a conference in Taipei, a crypto-rave in Brazil.

So the Glass Room project continues to evolve and develop. Indeed, it may be coming to you in 2018 – wherever and whoever you are. And if it’s not coming anywhere near you, please get in touch – you could be the perfect Glass Room Experience host.

This story was written by Alistair Alexander of Tactical Tech. Alexander helped develop and implement The Glass Room project.

Top photo of Glass Room storefront by Nousha Salimi.

Visit the Glass Room London virtually, in WebVR

This past week, Mozilla and Tactical Tech launched The Glass Room in London. This “store” is actually an exhibition that disrupts how we think about technology and encourages people to make informed choices about their online lives. Now, anyone can experience the exhibit online and in real life.

Like a Black Mirror episode come to life, The Glass Room prompts reflection, experimentation and play. At first glance, it offers the latest in shiny digital consumer products, such as the newest tablet, fitness tracker or facial recognition software. But as visitors go inside, they find there is nothing for sale.

A closer look at the ‘products’ reveals works of art that peek behind the screens and into the hidden world of what happens to user data. The ‘Ingenius’ team is on hand to answer questions raised by the exhibit, engaging the public in conversation and helping them with alternatives, privacy tips and tricks.

This 360° view will take you deep inside the space and allow you to experience the exhibit everyone in London is talking about.

As a pioneer in WebVR technology, Mozilla believes this is an excellent opportunity to make this unique experience available on the web to everyone, everywhere, without the need to install an app or proprietary VR software. You simply click on the link and enjoy.

If you have an Oculus Rift or HTC VIVE, you can click on the VR icon to launch the Glass Room experience in WebVR. You can then immerse yourself in everything The Glass Room has to offer without taking a trip to London. All you need is the latest version of Firefox. WebVR for Firefox is enabled by default on Windows, so simply open the web site and you can explore the virtual Glass Room with your headset and hand controls. Mac users with headsets can download Firefox Nightly for early access to WebVR.

As this revolutionary technology develops, there will be more opportunities to create interactive exhibits, like The Glass Room, in VR that tell immersive stories on the web.

Visit vr.mozilla.org to find more experiences we recommend in WebVR, including A-Painter, a VR painting experience. If you’d like to learn more about the history and capabilities of WebVR, check out this Medium post by Sean White.